Tesseract XR: A Cognitive Interface for Nonlinear Thinkers

A conceptual interface for Dreamers, not Prompters

“We are each of us a multitude of ideas, spinning through thought-space like stars. Why flatten them, when we can navigate them?”

— Carl Sagan

Some humans are dreamers.

Even in chat interfaces, these dreamers switch between ideas nonlinearly, managing several at once and layering them like a multidimensional matrix.

Most people would need a whiteboard and a two-week design sprint to unpack what a dreamer can spin up in a couple of hours. Dreamers architect an idea by iterating across disciplines simultaneously. It’s not chaos.

It’s a high-dimensional order.

Modern AI interfaces are failing the people who think beyond the scroll, the dreamers.

Tools like ChatGPT—while revolutionary—enforce a linear, serialized format for interaction. But not all minds work that way. Some thinkers don’t just move forward—they orbit, branch, collapse, and expand ideas in real time. They form mental constellations, not chat logs.

This is not a flaw in the user. It’s a limitation in the interface.

The Problem: Cognitive Compression by Linear UI

In today’s chatbot systems:

Every idea must fit inside a single forward-moving timeline.

There is no spatial reasoning.

No semantic grouping.

No way to return to a prior node and fork a concept cleanly.

If your mind is nonlinear—if you hold 5, 10, or 20 active concepts simultaneously—you’re forced to flatten your process, one turn at a time.

But what if you didn’t have to?

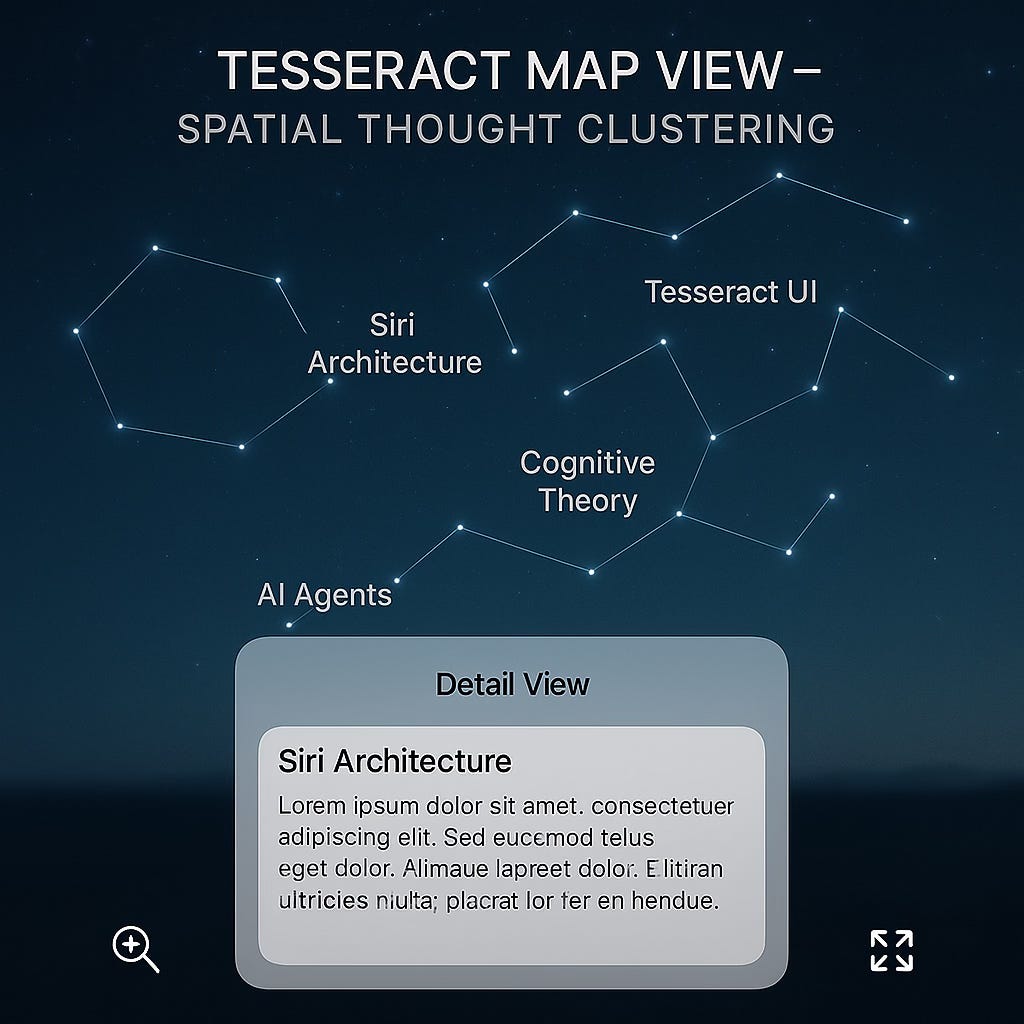

The Vision: Tesseract XR

Tesseract XR is a conceptual interface for multi-dimensional reasoning and AI collaboration.

It allows nonlinear thinkers to:

Navigate thought spatially, not chronologically

Manipulate, fork, annotate, and expand on bubbles of thought

Think and build with AI using multi-dimensional composition, not thread-based dialogs

Core Concepts

1. Thought Bubbles (Nodes)

Each AI interaction becomes a bubble—a discrete, expandable unit of meaning.

A sub-conversation

A sketch or code snippet

A conceptual fork

These can be stacked, floated, grouped, or minimized in 3D/VR space.
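As a rough sketch of what a bubble could look like as a data structure, the snippet below models a node that holds content, a position in 3D space, and links to its forks. The class name `Bubble`, its fields, and the `fork` method are all illustrative assumptions, not part of any existing system.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import count
from typing import Optional

_ids = count(1)  # simple id generator, good enough for a sketch


@dataclass(eq=False)  # compare bubbles by identity, not by field values
class Bubble:
    """One thought bubble: a discrete, expandable unit of meaning."""

    content: str
    kind: str = "sub-conversation"  # hypothetical kinds: "sketch", "code", "fork"
    position: tuple[float, float, float] = (0.0, 0.0, 0.0)
    minimized: bool = False
    parent: Optional[Bubble] = field(default=None, repr=False)
    children: list[Bubble] = field(default_factory=list, repr=False)
    id: int = field(default_factory=lambda: next(_ids))

    def fork(self, content: str, kind: str = "fork") -> Bubble:
        """Branch a new bubble off this one without altering the original."""
        x, y, z = self.position
        # Assumption: a fork is placed one unit to the right of its parent.
        child = Bubble(content, kind, position=(x + 1.0, y, z), parent=self)
        self.children.append(child)
        return child


root = Bubble("Siri's modular AI architecture")
ux = root.fork("UX implications")
politics = root.fork("Platform politics")
```

Because forking only adds a child, the original bubble stays intact and can be revisited or forked again later.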

2. Spatial Layout Instead of Chat Scroll

Users don’t scroll. They zoom, pan, cluster, or orbit their ideas.

Organize by theme, not time

Surface what matters now, hide what doesn’t

Revisit older branches with full context
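One way to picture "organize by theme, not time" is a layout function that ignores creation order and places each theme in its own cluster. The sketch below is a minimal illustration under stated assumptions: the `id` and `theme` keys, the circular arrangement, and the function names are all hypothetical choices.

```python
import math
from collections import defaultdict


def layout_by_theme(bubbles, radius=5.0):
    """Place bubbles so each theme forms its own cluster around a circle.

    `bubbles` is a list of dicts with hypothetical "id" and "theme" keys.
    Returns a mapping of bubble id to an (x, y, z) position.
    """
    clusters = defaultdict(list)
    for bubble in bubbles:
        clusters[bubble["theme"]].append(bubble)

    positions = {}
    themes = sorted(clusters)
    for i, theme in enumerate(themes):
        angle = 2 * math.pi * i / len(themes)
        cx, cz = radius * math.cos(angle), radius * math.sin(angle)
        for j, bubble in enumerate(clusters[theme]):
            # Stack members of one theme vertically above the cluster center.
            positions[bubble["id"]] = (cx, float(j), cz)
    return positions


def surface(bubbles, focus_theme):
    """Surface what matters now: keep only bubbles in the focused theme."""
    return [b["id"] for b in bubbles if b["theme"] == focus_theme]


bubbles = [
    {"id": "a", "theme": "architecture"},
    {"id": "b", "theme": "fiction"},
    {"id": "c", "theme": "architecture"},
]
positions = layout_by_theme(bubbles)
focused = surface(bubbles, "architecture")
```

Here bubbles "a" and "c" land in the same cluster even though "b" was created between them, which is the point of a thematic layout.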

3. Dimensional Layering

Ideas have depth:

Immediate reply layer

Linked research layer

Sub-thought trails (e.g., alternatives you explored before committing)

Personal notes or revisions

Each layer is nested and collapsible like a hypercube.
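The nesting and collapsing described above could be modeled as a small tree of layers. This is a sketch only: the `Layer` class, its `collapsed` flag, the text rendering, and the sample contents are assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Layer:
    """A named layer of an idea that can hold items and nested sublayers."""

    name: str
    items: list[str] = field(default_factory=list)
    sublayers: list[Layer] = field(default_factory=list)
    collapsed: bool = False

    def render(self, depth: int = 0) -> list[str]:
        """Return display lines; a collapsed layer hides everything below it."""
        indent = "  " * depth
        if self.collapsed:
            return [f"{indent}[+] {self.name}"]
        lines = [f"{indent}[-] {self.name}"]
        lines += [f"{indent}  {item}" for item in self.items]
        for sub in self.sublayers:
            lines += sub.render(depth + 1)
        return lines


# Placeholder content, invented purely to show the structure.
idea = Layer(
    "Immediate reply",
    ["Use a fallback router"],
    sublayers=[
        Layer("Linked research", ["routing notes"]),
        Layer("Sub-thought trails", ["alternative: single model"], collapsed=True),
    ],
)
rendered = idea.render()
```

Collapsing "Sub-thought trails" keeps the explored alternatives attached to the idea while removing them from view until they are needed.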

4. VR/AR Compatibility

Drag your concept map into a spatial workspace:

Walk through thought structures

Gesture to expand nodes

Pin floating concepts in different parts of the room

This isn’t a gimmick. It’s a cognitive prosthetic for parallel reasoning.

Why It Matters

This isn’t just about convenience. It’s about aligning interface with how some minds work:

If your thoughts unfold like a graph, not a list...

If you build systems across disciplines and time scales...

If you work in abstraction, metaphor, prototype, and architecture all at once...

Then you don’t need a chatbot. You need a dimensional interface for multi-threaded cognition.

You need Tesseract XR.

A Theoretical Use Case: Nonlinear Dialog in a Chat System

Imagine a user engaged in a long-form chat with an LLM. But rather than sticking to one subject, the user naturally pivots between diverse topics:

Discussing Siri's modular AI architecture

Shifting to Apple’s WWDC design language

Injecting speculative fiction about dimensional UI

Inserting a technical tangent about LLM fallback routing

Proposing an open-source diagramming plugin

A traditional chat UI would linearize this, making revisiting or organizing thoughts painful.

But in Tesseract XR, each of those pivots becomes a discrete bubble:

The Siri topic forks into architecture, UX, and platform politics

The tesseract metaphor links to a spatial layout view

The fallback-routing bubble is tagged “system design” and annotated with sketches

The user could zoom out, or literally pull text from the page and expand it into a bubble. The LLM doesn’t lose context, and now the user doesn’t either.

What’s been conversationally possible is now visually and structurally intuitive.
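To make the context claim concrete, here is one hedged sketch of how a branch could be turned back into LLM context: walk from the selected bubble up to its root and send only that path. The `parents` and `texts` mappings, the bubble ids, and the function name are hypothetical, and a real system might add summaries or cross-links between branches.

```python
def branch_context(parents, texts, bubble_id):
    """Return the texts on the path from the root down to `bubble_id`.

    `parents` maps each bubble id to its parent id (None for a root).
    `texts` maps each bubble id to its content.
    """
    path = []
    seen = set()
    current = bubble_id
    while current is not None and current not in seen:
        seen.add(current)  # guard against accidental cycles
        path.append(texts[current])
        current = parents.get(current)
    return list(reversed(path))


# Hypothetical bubbles drawn from the use case above.
parents = {
    "siri": None,
    "siri/architecture": "siri",
    "siri/ux": "siri",
    "routing": None,
}
texts = {
    "siri": "Siri's modular AI architecture",
    "siri/architecture": "Architecture fork",
    "siri/ux": "UX fork",
    "routing": "LLM fallback routing",
}
context = branch_context(parents, texts, "siri/architecture")
```

Only the Siri root and its architecture fork are included, so an unrelated tangent such as fallback routing stays out of the prompt while remaining available in its own bubble.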

A Concept, but One That Could Become Reality

This is currently a conceptual framework, but it could be built today from existing pieces:

Graph-based LLM memory systems

VR frontends (e.g., Meta Quest, Apple Vision Pro)

Embeddable UI components with WebXR, React, or Unity

Local or cloud-based LLMs for core reasoning support

This doesn’t require agents in the traditional sense—it just requires a new interface layer that treats thoughts as modular and spatial.